I’m about to tell you something that’s going to piss you off. Actually, scratch that—if you’re a PC gamer, you should already be pissed off. Because Nvidia just made it crystal clear: they don’t give a DAMN about you anymore.

Fresh reports confirm that Nvidia is discontinuing production of the RTX 5070 Ti and multiple 16GB VRAM models, instead focusing on pushing 8GB garbage onto consumers. And why? Because AI datacenters are eating all the memory supply, and Nvidia would rather sell you an overpriced, underpowered card than lose a single dollar of their precious AI profits.

Let’s talk about how gamers are getting absolutely screwed in the AI gold rush.

The Situation Is Worse Than You Think

Here’s what’s happening right now: Nvidia is ending production of the 16GB GPUs to focus on 8GB versions. Read that again. They’re killing off the cards that actually have enough VRAM for modern gaming, so they can push more 8GB models on you.

The RTX 5070 Ti? The “Goldilocks GPU” that reviewers loved for hitting the sweet spot of performance and price? Dead. Gone. Discontinued before it even had its first birthday. ASUS has already confirmed they’ve halted RTX 5070 Ti production and have no idea when—or if—supply will resume.

Oh, and it gets better. Nvidia plans to slash RTX 50-series GPU production by up to 40% in early 2026 due to GDDR7 memory shortages. So not only are they killing the good cards, they’re making fewer cards overall. Supply down, demand up—you know what that means for prices.

Why AI Is Ruining PC Gaming

Let me paint you a picture of what’s actually happening here. AI is projected to consume nearly 20% of global DRAM wafer capacity in 2026. Twenty percent! That’s memory that could be going into your gaming PC, your graphics cards, your system RAM. Instead, it’s being stuffed into data centers so tech companies can train ChatGPT to write better poetry or whatever.

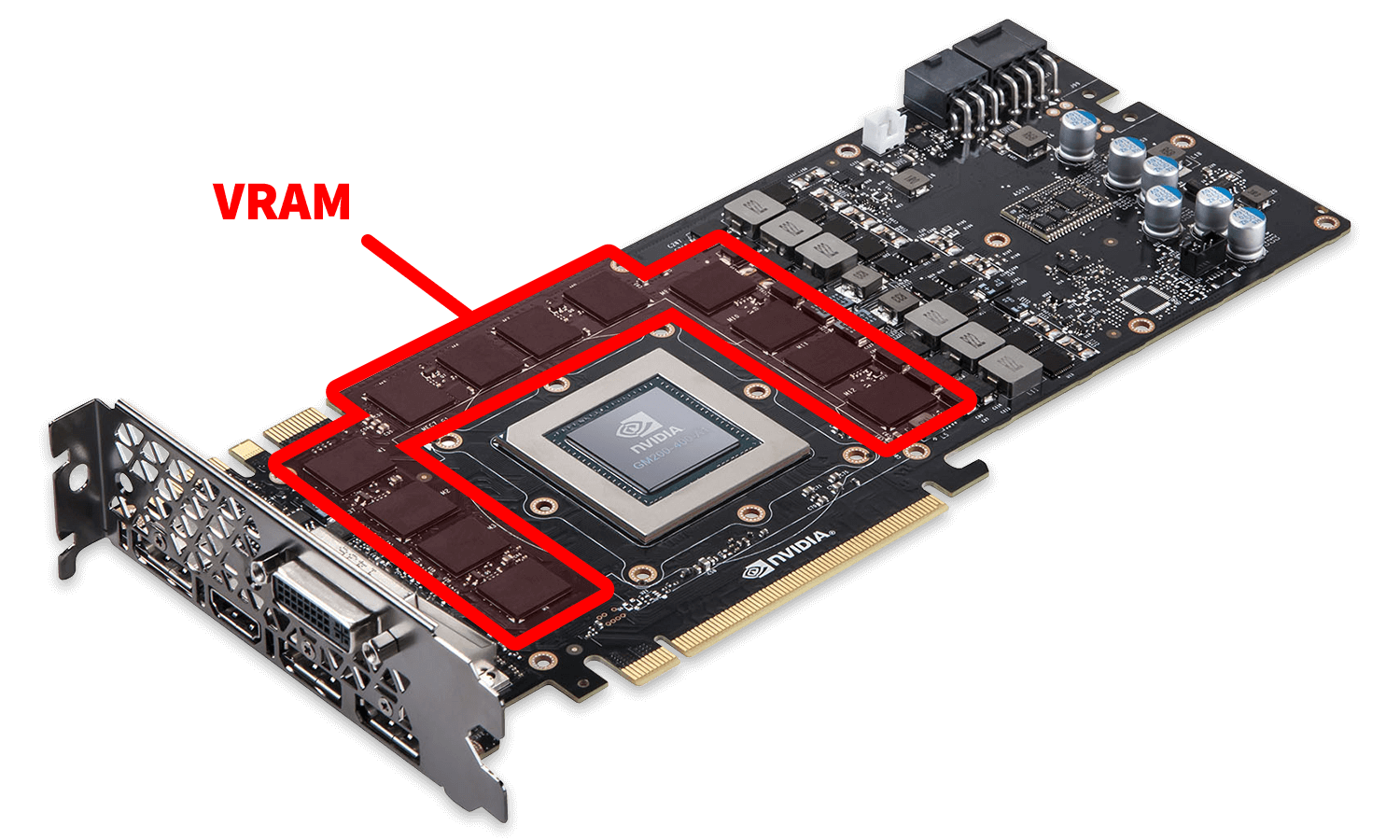

Here’s the kicker: 1GB of HBM (the memory AI chips use) consumes 4 times the manufacturing capacity of 1GB of standard DRAM, while GDDR7 takes about 1.7 times as much. So every AI chip they manufacture eats up resources that could make multiple consumer products. But Nvidia doesn’t care, because those AI chips sell for tens of thousands of dollars with massive profit margins.
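To put those multipliers in perspective, here’s a quick back-of-the-envelope sketch. It only uses the 4x and 1.7x figures quoted above. The 80GB HBM accelerator and the 16GB card size are my own illustrative assumptions, not numbers from any report, and the function name is made up for this example.

```python
# Relative wafer capacity needed per GB, with standard DRAM as the baseline.
# The 4.0 and 1.7 multipliers are the figures quoted in the article.
CAPACITY_PER_GB = {"dram": 1.0, "gddr7": 1.7, "hbm": 4.0}


def equivalent_gb(gb: float, source: str, target: str) -> float:
    """GB of `target` memory the same wafer capacity could have produced."""
    return gb * CAPACITY_PER_GB[source] / CAPACITY_PER_GB[target]


# Hypothetical accelerator carrying 80 GB of HBM (illustrative assumption).
hbm_gb = 80
as_dram = equivalent_gb(hbm_gb, "hbm", "dram")
as_gddr7 = equivalent_gb(hbm_gb, "hbm", "gddr7")
cards_16gb = as_gddr7 / 16  # how many 16GB cards that GDDR7 could fill

print(f"{hbm_gb} GB of HBM ~ {as_dram:.0f} GB of standard DRAM")
print(f"{hbm_gb} GB of HBM ~ {as_gddr7:.0f} GB of GDDR7 (~{cards_16gb:.1f} 16GB cards)")
```

Under those assumptions, one accelerator’s worth of HBM ties up about the same capacity as 320GB of standard DRAM, or roughly 188GB of GDDR7, which is close to twelve 16GB graphics cards. It’s a rough ratio, not a production model, but it shows why the trade-off hurts.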

Memory manufacturers like Samsung, SK Hynix, and Micron aren’t hiding it either. Micron’s entire 2026 HBM output is already committed, and every wafer devoted to HBM is one not available for DRAM. They’re not even pretending to prioritize gamers anymore. The CEO of Phison Electronics, Taiwan’s largest NAND controller company, dropped this bomb: “NAND will face severe shortages in the next year. I think supply will be tight for the next ten years.”

The 8GB VRAM Insult

Now let’s address the elephant in the room: 8GB of VRAM in 2026 is a joke. It’s insulting. It’s Nvidia treating you like an idiot and hoping you won’t notice.

In 2025, Nvidia launched an 8GB variant of the RTX 5060 Ti for almost $400, and the 8GB RTX 5060 for $299. Four hundred dollars for 8GB of VRAM. That’s highway robbery with a smile.

Here’s the reality: 8GB of VRAM is insultingly low for today’s games, even at 1080p, thanks to larger-than-ever texture sizes and complex ray tracing effects. You can literally compare the 16GB and 8GB variants of the RTX 5060 Ti side by side—the 8GB version crashes or drops below 30 FPS in modern games while the 16GB variant handles them fine.

AMD and Intel have been shipping 16GB cards in the same price range for years. Intel’s Arc B580? 12GB for budget-friendly 1440p gaming. AMD’s offerings? Loaded with VRAM. But Nvidia? They’re banking on brand loyalty and consumer ignorance to sell you cards that’ll be obsolete before you finish paying them off.

And before you say “but DLSS fixes everything!”—no. Frame generation is a band-aid, not a cure. It can’t magically create VRAM that doesn’t exist when textures won’t load or games crash because you ran out of memory.

Nvidia’s Priorities Are Crystal Clear

Let’s be brutally honest about what’s happening here. Jensen Huang himself said it: Nvidia has “evolved from a gaming GPU company to now an AI data center infrastructure company.” That’s not him setting a vision for the future—that’s him describing what’s already happened.

Gaming is legacy business. Data centers are the future. And Nvidia is allocating every resource toward maximizing AI revenue while gaming withers from strategic neglect.

Think about it from their perspective: why would they manufacture a $500 RTX 5060 Ti with 16GB when that same memory could go into a $1,000+ RTX 5080 with double the profit margin? Or better yet, into an AI datacenter chip that sells for $30,000+ with multi-year guaranteed contracts?

The answer is: they wouldn’t. And they aren’t.

The Price Apocalypse Is Coming

Oh, you thought we were done? Nah, we’re just getting started with the bad news.

Memory prices have gone absolutely insane. A 64GB DDR5 kit that cost around $195 just weeks ago has ballooned to $788 in some markets. Some retailers are reporting price increases of 100% or more. And it’s not slowing down—analysts say memory prices won’t peak until at least late 2026, maybe 2027.
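If you want to check the math on that DDR5 kit yourself, here’s a minimal sketch. The two prices are the ones quoted above. The helper name is just something I made up for the example.

```python
def pct_increase(old: float, new: float) -> float:
    """Percentage increase going from `old` to `new`."""
    return (new - old) / old * 100.0


# Prices quoted in the article for a 64GB DDR5 kit.
old_price, new_price = 195.0, 788.0

rise = pct_increase(old_price, new_price)
multiple = new_price / old_price

print(f"${old_price:.0f} -> ${new_price:.0f}: +{rise:.0f}% ({multiple:.1f}x the old price)")
```

That works out to roughly a 304% increase, or about four times the old price. So “100% or more” is the polite end of the range. The worst cases are far beyond a doubling.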

DRAM and GDDR memory now accounts for up to 80% of a GPU’s bill of materials. That means when memory costs go up, GPU prices have to go up. Reports indicate both AMD and Nvidia are planning phased price increases throughout 2026, with some models potentially doubling in MSRP.
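Here’s a rough sketch of how a memory price hike flows into the total bill of materials. It takes the “up to 80%” figure above at face value and assumes every other component cost stays flat. It also says nothing about how closely MSRP tracks the bill of materials, so treat it as illustration, not a forecast. Both function names are hypothetical.

```python
def bom_increase(memory_share: float, memory_cost_rise: float) -> float:
    """Fractional BOM rise when only the memory cost changes.

    memory_share: memory's fraction of the original BOM (0.8 means 80%).
    memory_cost_rise: fractional rise in memory cost (1.0 means +100%).
    """
    return memory_share * memory_cost_rise


def memory_rise_needed(memory_share: float, target_bom_rise: float) -> float:
    """Fractional memory cost rise needed to hit a target BOM rise."""
    return target_bom_rise / memory_share


share = 0.8  # the "up to 80%" figure from the article, used as an upper bound

# Memory doubles in cost (+100%), everything else assumed flat.
print(f"BOM rises by {bom_increase(share, 1.0):.0%}")

# How much memory would have to rise for the whole BOM to double.
print(f"Memory must rise {memory_rise_needed(share, 1.0):.0%} to double the BOM")
```

At an 80% share, memory doubling in cost pushes the whole bill of materials up by 80%, and a 125% memory increase is enough to double it. At a lower memory share the effect shrinks proportionally, which is why the “up to” in that 80% figure matters.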

We’re already seeing the RTX 5090 hit $5,000 in some markets. The RTX 5070 that sells for $550 today? Expect $700+ by summer 2026, if you can even find one. And remember, they’re cutting production by 40%, so good luck with availability.

ASUS already confirmed price increases starting January 5, 2026. Other manufacturers will follow. This is happening right now, not some distant future scenario.

| GPU Model | MSRP (Launch) | Approx. Oct/Nov 2025 Avg/Low | Approx. Jan 2026 Avg/Low | Trend (Past Few Months) | Notes |
|---|---|---|---|---|---|
| RTX 5090 | $1,999 | $2,000–$2,500 (early post-launch) | $3,000–$5,000+ (some listings) | Sharp rise (premiums/scalping) | Heaviest impact from memory shortages & demand; price gouging at its finest |
| RTX 5080 | ~$999–$1,200 | ~$1,100–$1,300 | $1,200–$1,600+ | Moderate upward | Following high-end pressure |
| RTX 5070 / 5070 Ti | $549–$749 | ~$570–$800 | $600–$850+ | Slight increase | Stable-ish but edging up |
| RTX 4080 (previous gen) | $1,199 | ~$1,100–$1,200 | ~$1,500–$1,800 (some) | Up slightly | Older stock rising in spots |
| RTX 4070 / 4070 Super | $549–$599 | ~$500–$600 | ~$550–$650 | Stable to minor up | Mid-range holding better |
| RX 9070 XT (AMD) | $599 | ~$600–$700 | $620–$750+ | Small rise (~$20–$50) | AMD’s initial VRAM-based hike |
| RX 9070 | $549 | ~$550–$650 | $570–$700+ | Small rise | Similar to above |

Nvidia Might Not Even Give Partners VRAM Anymore

Just when you thought it couldn’t get worse, there are reports that Nvidia has stopped bundling VRAM with the GPUs it sells to board partners, leaving them to secure memory on their own.

Seriously!? Nvidia might start shipping just the GPU die to manufacturers and saying “good luck finding memory!” This would absolutely devastate smaller board partners who don’t have the purchasing power to secure memory at scale. Fewer manufacturers means less competition, which means even higher prices for you.

If Nvidia goes ahead with this move to stop bundling VRAM, smaller partners—already operating on razor-thin margins—would feel the real pressure. The big guys like ASUS and MSI will be fine. Everyone else? They might just stop making gaming cards altogether.

What This Means For You

Look, I’m not going to sugarcoat this. If you’re planning to build or upgrade a gaming PC, you’re getting screwed. Hard.

Here’s the situation:

Buy now, but ONLY if you have to. Current inventory likely represents the best availability and pricing you’ll see for the foreseeable future. That RTX 4070 or 4080? Still expensive, but they’ll look like bargains in six months. If you can hold off on buying for a few years, I suggest you do.

8GB GPUs are a trap. Don’t buy them. I don’t care how cheap they are, I don’t care what Nvidia’s marketing says about DLSS magic—8GB is not enough for games that are releasing right now, let alone games coming in 2027 or 2028.

Consider AMD or Intel. Yeah, I know Nvidia has the mindshare and the best ray tracing. But AMD’s RX 7900 XT comes with 20GB of GDDR6 and actually performs. Intel’s Arc B580 is punching way above its weight class for budget builds. Don’t give Nvidia money for insulting you.

Pre-builts might actually be your best bet. System integrators lock in GPU inventory months in advance through volume contracts. They might have stock when DIY builders are scrambling.

The used market is about to pop off. If people can’t afford new cards, they’ll turn to used. Expect prices to rise there too.

The Bottom Line: Gamers Are Collateral Damage

Here’s the harsh truth: Nvidia could manufacture more gaming cards if it prioritized gaming. It could allocate memory differently. It simply chooses not to, because AI data center chips generate twelve times more revenue.

Nvidia, and others, aren’t being forced to abandon gamers by market conditions. They’re making a choice. They’re choosing AI profits over the community that built them into the powerhouse they are today. Every gamer who bought a GTX 970, a GTX 1080, an RTX 2080—you built Nvidia. And now they’re kicking you to the curb because ChatGPT is more profitable.

This isn’t a temporary shortage. This isn’t bad luck. This is Nvidia systematically prioritizing AI revenue while treating gaming as a legacy business they’re slowly exiting. They’re pushing inadequate 8GB cards, discontinuing good models, cutting production, and watching prices skyrocket—all while raking in record profits from AI.

We’re watching gaming PCs become luxury items in real-time. And Nvidia is perfectly happy with that outcome as long as their AI money printer keeps going brrr.

So yeah, I’m mad. You should be mad too. This is what happens when one company has 80%+ market share and decides your needs don’t matter anymore.

Sound Off

Get down in that comment section and let me know: Are you buying a GPU now before prices get worse? Switching to AMD? Going console? I want to hear how you’re dealing with this mess. And if you work at Nvidia and want to defend this nonsense, I’m all ears.

Stay nerdy, friends. And for the love of silicon, don’t buy an 8GB GPU in 2026.