From www.tomsguide.com

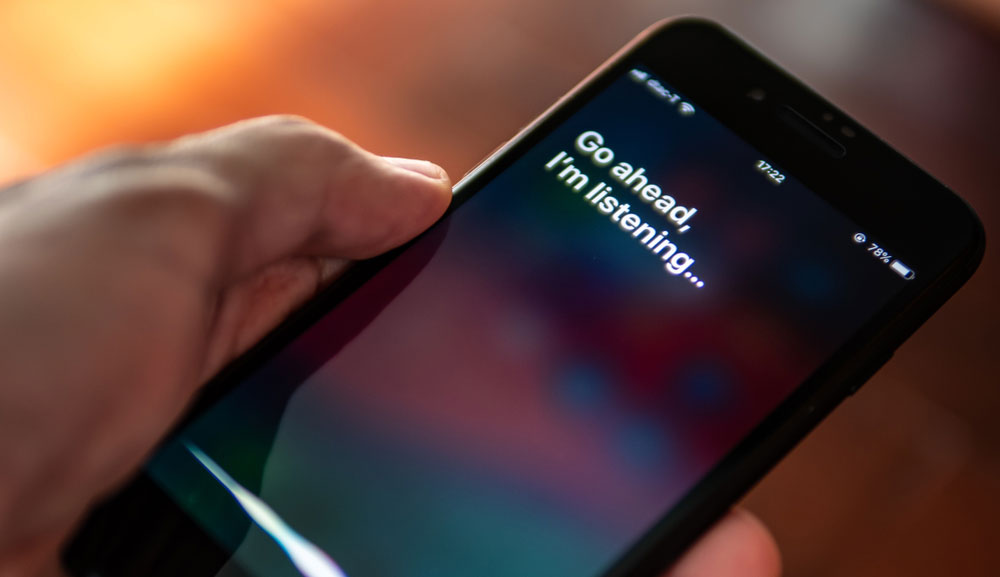

Apple has unveiled a new small language model called ReALM (Reference Resolution As Language Modeling) that is designed to run on a phone and make voice assistants like Siri smarter by helping them understand context and ambiguous references.

This comes ahead of the launch of iOS 18 in June at WWDC 2024, where we expect a big push behind a new Siri 2.0, though it’s not clear if this model will be integrated into Siri in time.

This isn’t Apple’s first foray into artificial intelligence in recent months. A mixture of new models, tools to make AI more efficient on small devices, and partnerships all paint a picture of a company ready to make AI the centerpiece of its business.

ReALM is the latest announcement from Apple’s rapidly growing AI research team and the first to focus specifically on improving existing models, making them faster, smarter and more efficient. The company claims it even outperforms OpenAI’s GPT-4 on certain tasks.

Details were released in a new open research paper from Apple published on Friday and first reported by VentureBeat on Monday. Apple hasn’t commented on the research or whether it will actually be part of iOS 18.

What does ReALM mean for Apple’s AI effort?

Apple seems to be taking a “throw everything at it and see what sticks” approach to AI at the moment. There are rumors of partnerships with Google, Baidu and even OpenAI. The company has put out impressive models and tools to make running AI locally easier.

The iPhone maker has been working on AI research for more than a decade, with much of it hidden away inside apps or services. It wasn’t until the release of the most recent cohort of MacBooks that Apple started to use the letters AI in its marketing — that will only increase.

A lot of the research has focused on ways to run AI models locally, without relying on sending large amounts of data to be processed in the cloud. This is essential both for keeping the cost of running AI applications down and for meeting Apple’s strict privacy requirements.

How does ReALM work?

ReALM is tiny compared to models like GPT-4, but that is because it doesn’t have to do everything. Its purpose is to provide context to other AI systems, such as Siri.

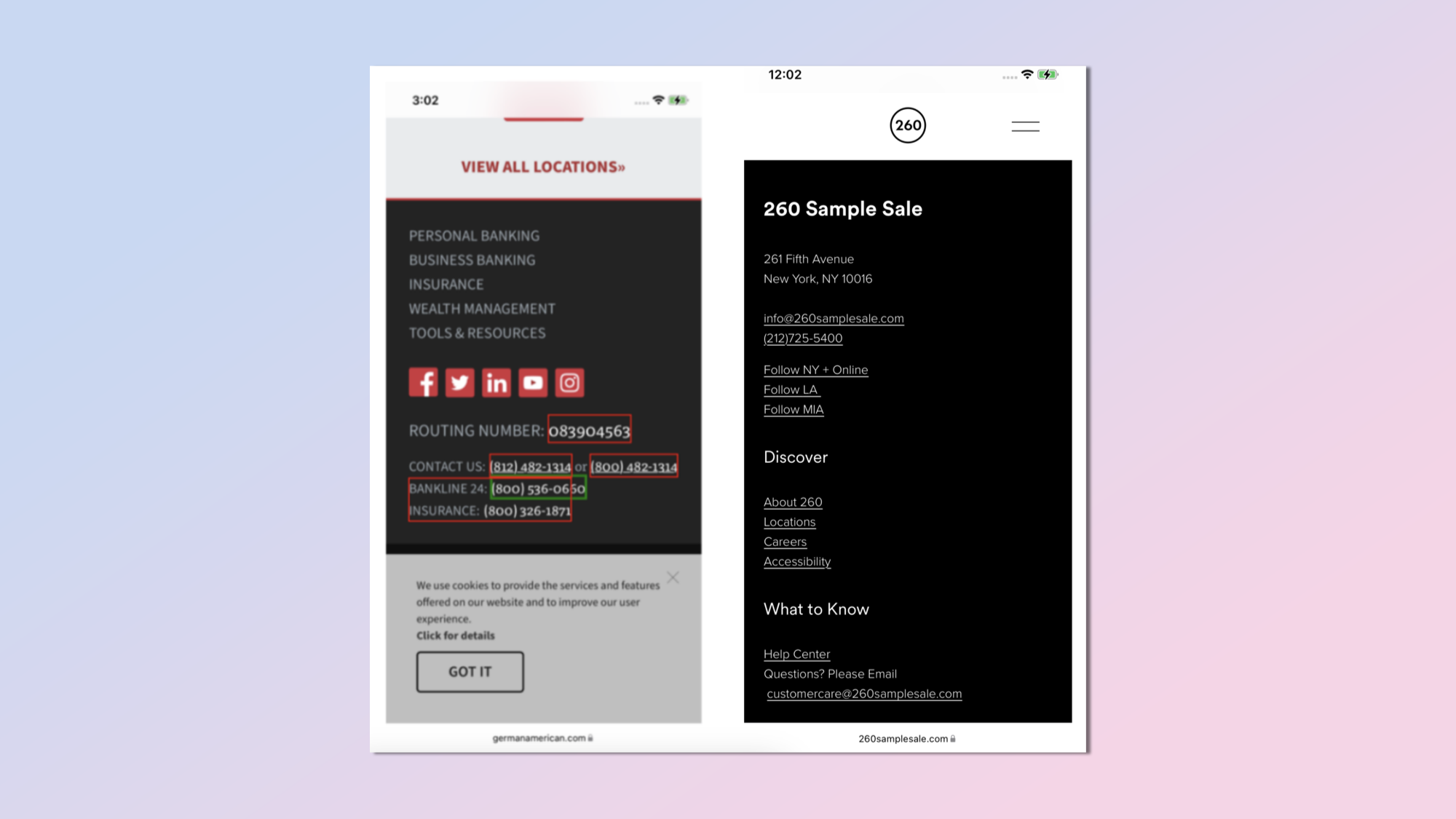

ReALM works by reconstructing the screen: it labels each on-screen entity along with its location. This creates a text-based representation of the visual layout, which can be passed to the voice assistant to give it context clues for user requests.
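To make the idea of a text-based screen representation concrete, here is a minimal sketch in Python. It is not Apple’s implementation. The `ScreenEntity` class, the `encode_screen` function, the `row_tolerance` parameter and the `[n]` labeling scheme are all hypothetical, chosen only to illustrate how labeled entities and their positions could be flattened into text in reading order.

```python
from dataclasses import dataclass


@dataclass
class ScreenEntity:
    """Hypothetical on-screen item: its visible label and top-left position."""
    label: str
    x: float  # left edge, in pixels
    y: float  # top edge, in pixels


def encode_screen(entities, row_tolerance=10.0):
    """Flatten entities into text, top to bottom and then left to right.

    Entities whose vertical position is within `row_tolerance` pixels of the
    first entity in the current row are placed on the same text line.
    This grouping rule is an assumption made for the sketch.
    """
    ordered = sorted(entities, key=lambda e: (e.y, e.x))

    rows = []
    for entity in ordered:
        if rows and abs(entity.y - rows[-1][0].y) <= row_tolerance:
            rows[-1].append(entity)
        else:
            rows.append([entity])

    lines = []
    index = 1
    for row in rows:
        parts = []
        for entity in sorted(row, key=lambda e: e.x):
            # Number each entity so a later request can refer to it by tag.
            parts.append(f"[{index}] {entity.label}")
            index += 1
        lines.append("\t".join(parts))
    return "\n".join(lines)


screen = [
    ScreenEntity("Directions", 20, 160),
    ScreenEntity("555-0100", 200, 104),
    ScreenEntity("Pharmacy", 20, 100),
]
print(encode_screen(screen))
```

In this sketch, a request such as “call that number” could be matched against the numbered text rather than against a screenshot, which is the kind of context clue the article describes.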

In terms of accuracy, Apple says ReALM performs as well as GPT-4 on a number of key metrics despite being smaller and faster.

“We especially wish to highlight the gains on onscreen datasets, and find that our model with the textual encoding approach is able to perform almost as well as GPT-4 despite the latter being provided with screenshots,” the authors wrote.

What this means for Siri

If this version of ReALM, or a future one, is deployed to Siri, the assistant will have a better understanding of what a user means when they tell it to “open this app” or ask “can you tell me what this word means?” about an image.

It would also give Siri more conversational abilities without having to fully deploy a large language model on the scale of Gemini.

When tied to other recent Apple research papers that allow for “one shot” responses — where the AI can get the answer from a single prompt — it is a sign Apple is still investing heavily in the AI assistant space and not just relying on outside models.


The post Apple reveals ReALM — new AI model could make Siri way faster and smarter first appeared on www.tomsguide.com
