From 9to5mac.com

Instagram is rolling out a new safety feature, designed to protect teens from being exposed to unwanted nude photos in Instagram direct messages. The system will blur nude photos in Instagram DMs when the recipient is believed to be a teenager.

According to Instagram parent company Meta, the safety measure is designed to address three problems on the platform, including the growing problem of ‘sextortion’.

First, teenagers may be sent unsolicited nude photos they have no wish to see.

Second, sending someone a nude photo of a teenager is often illegal – and that may apply even if teenagers send photos of themselves.

Third, thousands of teenage boys have been caught up in so-called sextortion (sexual extortion) scams. A boy is contacted by an account that appears to belong to an attractive teenage girl, who flirts with him online, later sends nudes, and asks him to send some of himself in return. Once he does so, he is threatened with exposure and blackmailed, often into sending increasingly explicit photos.

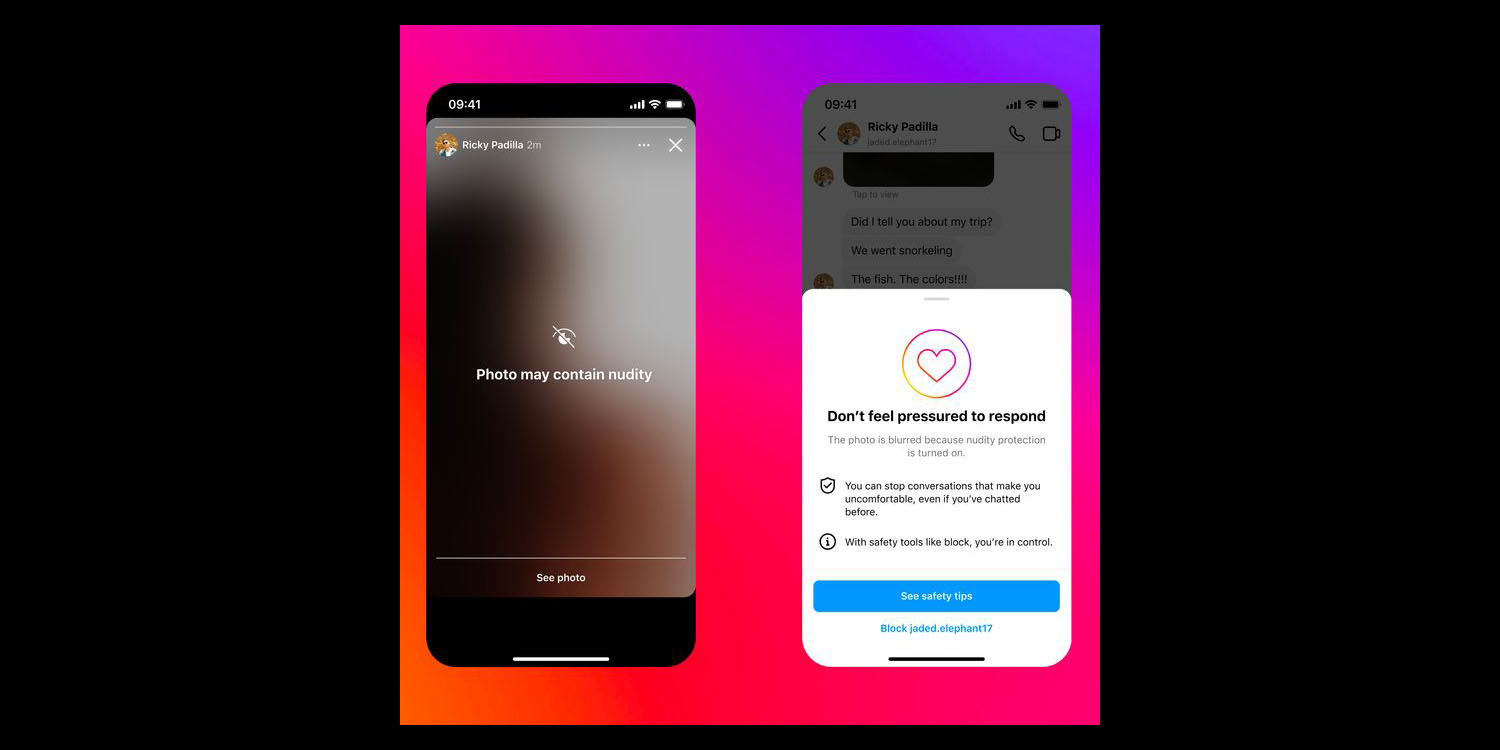

The Wall Street Journal reports that Meta is now automatically detecting nude photos in DMs. If the recipient’s date of birth indicates that they are a teenager, the photo will be blurred and a warning displayed.

Instagram users who receive nude images via direct message will see a pop-up explaining how to block the sender or report the chat, along with a note encouraging them not to feel pressured to respond. People who attempt to send a nude via direct message will be advised to be cautious and reminded that they can unsend a photo.

The feature will be on by default for teenage accounts, though they will have the option to disable it. For adult accounts, the reverse will be true: the feature will be off by default, but they will be able to choose to enable it.

The rollout appears to be a slow one: the company says the feature will be tested over the next few weeks and will then roll out globally “over the next few months.”

The report says Meta does not plan to extend the protections to Facebook Messenger or WhatsApp. The company says this is because it sees Instagram as the platform where the need is greatest.

> We want to respond to where we see the biggest need and relevance – which, when it comes to unwanted nudity and educating teens on the risks of sharing sensitive images – we think is on Instagram DMs, so that’s where we’re focusing first.

> WhatsApp is a different service and used in different ways. It’s primarily designed for private conversations with people you already know. You can’t search for other users and you need to know someone’s phone number to contact them.




The post Meta protecting teens by blurring nude photos in Instagram DMs first appeared on 9to5mac.com