From www.techspot.com


Forward-looking: It’s no secret that Nvidia has been the dominant GPU supplier to data centers, but there is now a very real possibility that AMD could become a serious contender in this market as demand grows. AMD was recently approached by a client asking it to build an AI training cluster consisting of a staggering 1.2 million GPUs. That would give it roughly 30 times as many GPUs as Frontier, the current fastest supercomputer. AMD supplied less than 2% of data center GPUs in 2023.

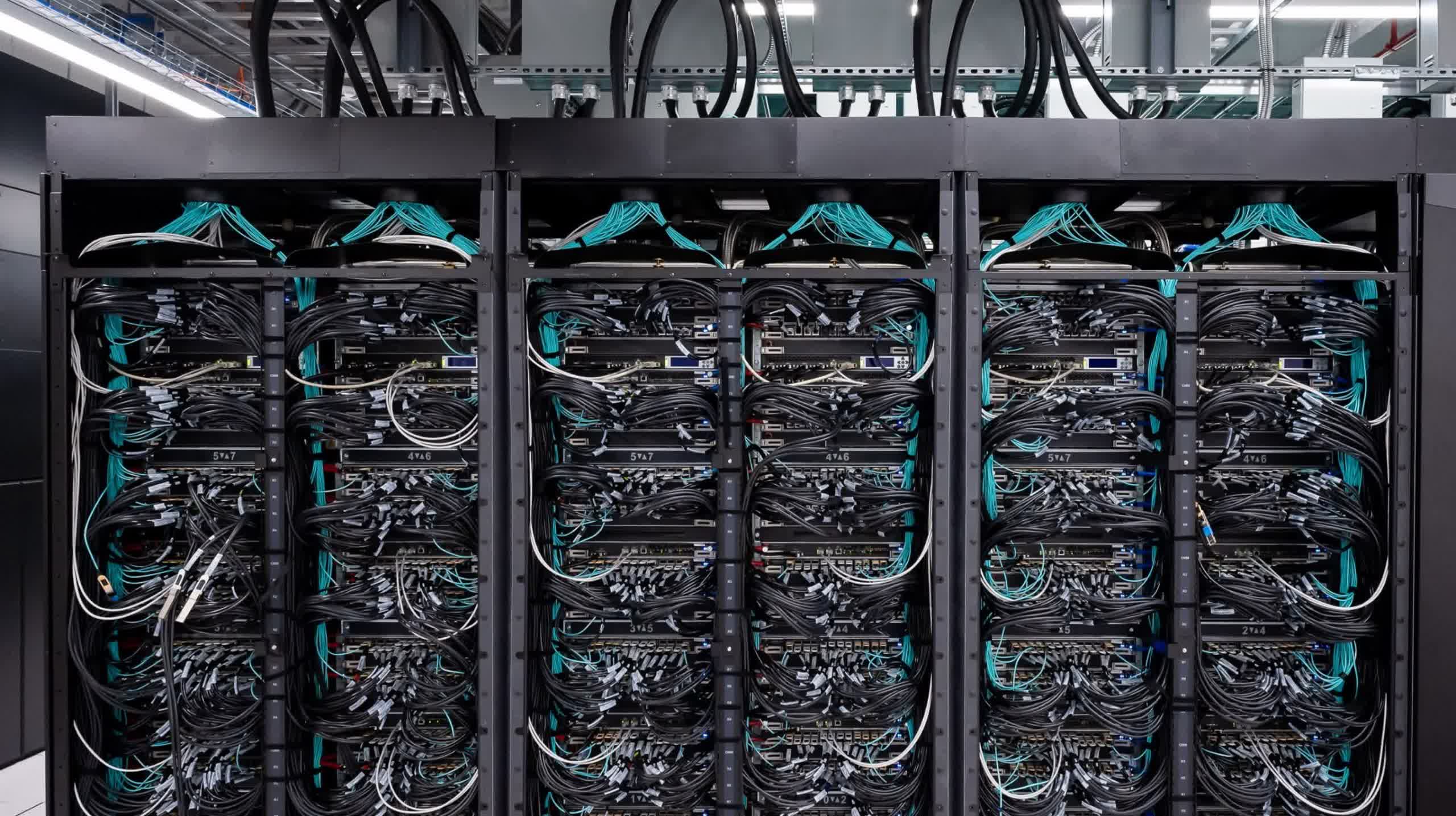

In an interview with The Next Platform, Forrest Norrod, AMD’s GM of Datacenter Solutions, revealed that the company had received genuine inquiries from clients to build AI training clusters using 1.2 million GPUs. To put that into perspective, current AI training clusters are typically built from a few thousand GPUs connected via a high-speed interconnect across several local server racks.

The scale being considered for AI development now is unprecedented. “Some of the training clusters that are being contemplated are truly mind-boggling,” Norrod said. In fact, the largest known supercomputer used to train AI models is Frontier, which has 37,888 AMD Instinct GPUs; the proposed cluster would have roughly 30 times as many.
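As a rough check on the “30 times” figure, the two GPU counts quoted above can simply be divided. This is only a sketch of the arithmetic: it compares GPU counts and assumes nothing about per-GPU performance, which would differ between Frontier’s hardware and whatever a future cluster might use.

```python
# GPU counts as quoted in the article
proposed_gpus = 1_200_000  # size of the cluster clients reportedly asked about
frontier_gpus = 37_888     # GPUs in Frontier

# Ratio of GPU counts only; says nothing about relative per-GPU speed
ratio = proposed_gpus / frontier_gpus
print(f"About {ratio:.1f}x as many GPUs as Frontier")
```

By GPU count alone, the ratio lands slightly above 30.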

Of course, it’s not that simple. Even at today’s scale, there’s a plethora of pitfalls to consider when creating AI training clusters. AI training requires low latency to deliver prompt results, consumes significant amounts of power, and must account for hardware failures, even with just a few thousand GPUs.

Most servers run at around 20% utilization and handle thousands of small, asynchronous jobs on remote machines. However, the rise of AI training is leading to a significant change in server structure. To keep up with machine learning models and algorithms, an AI data center must be equipped with vast amounts of computing power designed specifically for the job. AI training is essentially one large, synchronous job that requires every node in the cluster to pass information back and forth as fast as possible.

What’s most interesting is that these figures are coming from AMD, which accounted for less than 2% of data center GPU shipments in 2023. Nvidia, which made up the other 98%, has remained tight-lipped about what its clients have asked it to create. Given that it is the market leader, we can only imagine what it is working on.

While the proposed 1.2 million GPU supercomputer might seem outlandish, Norrod said that “very sober people” are considering spending up to one hundred billion dollars on AI training clusters. This shouldn’t come as a shock, as the past few years in the tech world have been defined by the explosion in AI advancements. It seems that companies are ready to invest significant sums in AI and machine learning to remain competitive.


The post AMD approached to make world’s fastest AI supercomputer powered by 1.2 million GPU first appeared on www.techspot.com